Table of contents

- Questions:

- What is the Difference between an Image, Container and Engine?

- What is the Difference between the Docker command COPY vs ADD?

- What is the Difference between the Docker command CMD vs RUN?

- How will you reduce the size of the Docker image?

- Why and when to use Docker?

- Explain the Docker components and how they interact with each other.

- Explain the terminology: Docker Compose, Dockerfile, Docker Image, Docker Container.

- In what real scenarios have you used Docker?

- Docker vs Hypervisor?

- What are the advantages and disadvantages of using Docker?

- What is a Docker namespace?

- What is a Docker registry?

- What is an entry point?

- How to implement CI/CD in Docker?

- Will data on the container be lost when the docker container exits?

- What is a Docker swarm?

- What are the docker commands for the following:

- What are the common Docker practices to reduce the size of Docker Images?

Questions:

What is the Difference between an Image, Container and Engine?

A Docker image is a read-only template containing the files and instructions needed to create a container. Containers are created from an image and run on the Docker platform. Think of an image as a blueprint or snapshot of what will be in a container when it runs.

A Docker container is an isolated environment where an application runs without affecting the rest of the system and without the system impacting the application. A container is a runtime instance of a Docker image.

Docker Engine is an open-source containerization technology for building and containerizing your applications. It acts as a client-server application: a long-running daemon (dockerd), an API for talking to the daemon, and the docker command-line client.

What is the Difference between the Docker command COPY vs ADD?

COPY is a Dockerfile instruction that copies files or directories from a local source in the build context to a destination in the image. It has only this one function.

Syntax: COPY <src> <dest>

ADD is also used to copy files and directories into a Docker image. In addition, it can download a file from a URL, and it automatically unpacks a local tar archive into the destination.

Syntax: ADD <src> <dest>
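As a minimal sketch of the difference, the Dockerfile fragment below uses both instructions. The file names (app.py, vendor.tar.gz), the /app paths and the example.com URL are hypothetical placeholders chosen for illustration only.

```dockerfile
# Base image chosen only for illustration.
FROM alpine:3.19

WORKDIR /app

# COPY: copies a file from the build context into the image unchanged.
COPY app.py /app/app.py

# ADD with a local tar archive: the archive is unpacked into the destination directory.
ADD vendor.tar.gz /app/vendor/

# ADD with a URL: the file is downloaded into the image (a remote archive is not unpacked).
ADD https://example.com/config.json /app/config.json
```

When plain copying is all that is needed, COPY is usually the safer choice because it never downloads or unpacks anything implicitly.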

What is the Difference between the Docker command CMD vs RUN?

CMD is the command the container executes by default when you launch the built image. A Dockerfile will only use the final CMD defined. The CMD can be overridden when starting a container by passing a different command: docker run <image> <command>

RUN is an image build step: the state of the container after the RUN command is committed to the container image. A Dockerfile can have many RUN steps that layer on top of one another to build the image.
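The sketch below shows where each instruction takes effect. It assumes a hypothetical Python application whose entry file app.py sits in the build context; the flask dependency is only an example.

```dockerfile
FROM python:3.12-slim

# RUN executes at build time; its result is committed as a new image layer.
RUN pip install --no-cache-dir flask

# A second RUN adds another layer on top of the previous one.
RUN mkdir -p /app

WORKDIR /app
COPY app.py .

# CMD is not executed during the build. It only records the default command
# that runs when a container is started from the image.
CMD ["python", "app.py"]
```

If this were built as an image named myapp (a made-up name), docker run myapp would start python app.py, while docker run myapp python --version would replace the CMD for that one container.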

How will you reduce the size of the Docker image?

Leverage .dockerignore, smaller base images and multi-stage builds to drastically reduce the size of your Docker images.

1. Use a smaller base image: The choice of the image has a significant impact on the size of the final Docker image. Choosing a smaller base image, such as Alpine Linux, can reduce the size of the image significantly.

2. Remove unnecessary dependencies: Only install the packages that are necessary for your application to run. Removing any unnecessary dependencies can significantly reduce the size of the final Docker image.

3. Use multi-stage builds: Use multi-stage builds to only include the necessary files in the final image. This will help reduce the size of the image by discarding unnecessary files from earlier stages of the build.

4. Prefer minimal distributions: Alpine is a security-oriented, lightweight Linux distribution based on musl libc and BusyBox, so an Alpine-based image carries far fewer preinstalled packages than a full distribution such as Ubuntu.

5. Do not rely on the registry to shrink the image: registries such as Google Container Registry, AWS Elastic Container Registry or Docker Hub store and transfer layers in compressed form and reuse layers shared between images, but the size of the image itself is determined by how it is built.
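A multi-stage build (point 3) combined with a small base image (point 1) might look like the sketch below. It assumes a hypothetical Go project with its main package and go.mod at the root of the build context; the paths /src, /out and the binary name app are illustrative.

```dockerfile
# Stage 1: build environment with the full Go toolchain.
FROM golang:1.22 AS build
WORKDIR /src
COPY . .
# Build a statically linked binary so it can run on a minimal base image.
RUN CGO_ENABLED=0 go build -o /out/app .

# Stage 2: small runtime image that receives only the compiled binary.
FROM alpine:3.19
COPY --from=build /out/app /usr/local/bin/app
CMD ["/usr/local/bin/app"]
```

Only the last stage ends up in the final image, so the compiler, source code and build cache from the first stage are left behind.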

Why and when to use Docker?

Docker is used to create, deploy, and run applications using containers for portability, scalability, isolation and consistency. It provides a consistent runtime environment, allows for easy application deployment and management, and facilitates scaling and load handling.

Docker makes it easy to create consistent and portable environments for applications that can run on any host where Docker is available, largely independent of how the underlying operating system is set up.

Docker simplifies the process of packaging and distributing applications along with their dependencies, which reduces the risk of compatibility issues and errors.

Docker enables faster and more efficient development workflows by allowing developers to build, run, and debug applications locally using containers that mimic the production environment.

Docker improves the scalability and reliability of applications by allowing them to run in isolated and distributed containers that can be easily managed and orchestrated using tools such as Docker Swarm or Kubernetes.

Docker enhances the security of applications by providing features such as namespaces, cgroups, seccomp, AppArmor, etc., that limit the access and resources of containers.

Explain the Docker components and how they interact with each other.

Docker has three main components: the client, the daemon, and the registry.

The client (docker) is a command-line interface that allows users to interact with Docker. The client sends commands to the daemon (dockerd) through a REST API, over a UNIX socket or a network interface.

The daemon (dockerd) is a background process that runs on the host machine and manages Docker objects such as images, containers, networks, and volumes. A daemon can also communicate with other daemons to manage Docker services.

The registry (such as Docker Hub) is a service that stores and distributes images. The registry can be public or private. The daemon can pull images from or push images to a registry.

Explain the terminology: Docker Compose, Dockerfile, Docker Image, Docker Container.

Docker Compose is a tool that allows users to define and run multi-container applications using a YAML file. Docker Compose simplifies the process of configuring and managing complex applications that have multiple components or services.

A Dockerfile is a text file that contains the instructions needed to build a Docker image. The image can be based on another image or built from an empty base using FROM scratch. A Dockerfile can have various instructions such as FROM, RUN, COPY, ADD, CMD, etc., that specify how to build an image.

Docker Image is a read-only template that contains the instructions and files needed to create a container. An image can be stored in a local or remote repository, such as Docker Hub. An image can be pulled from or pushed to a repository using docker pull or docker push commands.

Docker Container is a running instance of an image that is isolated from the host and other containers. A container can be started, stopped, restarted, killed, removed, or paused using docker commands. A container can also be modified by adding or changing files, but these changes live only in that container's writable layer and are lost when the container is removed, unless the container is committed to a new image.

In what real scenarios have you used Docker?

This question is meant to test your practical experience with Docker and how you have applied it to your projects. There is no definitive answer to this question, but you can mention some examples of how you have used Docker for:

Developing and testing applications locally using containers that mimic the production environment

Building and deploying applications using continuous integration and continuous delivery pipelines with tools such as Jenkins, Travis CI, etc.

Running microservices-based applications that have multiple components or services that communicate with each other using networks

Scaling and managing applications using container orchestration tools such as Docker Swarm or Kubernetes

Securing and isolating applications using features such as namespaces, cgroups, seccomp, AppArmor, etc.

You can also provide some details about the challenges you faced and how you solved them using Docker.

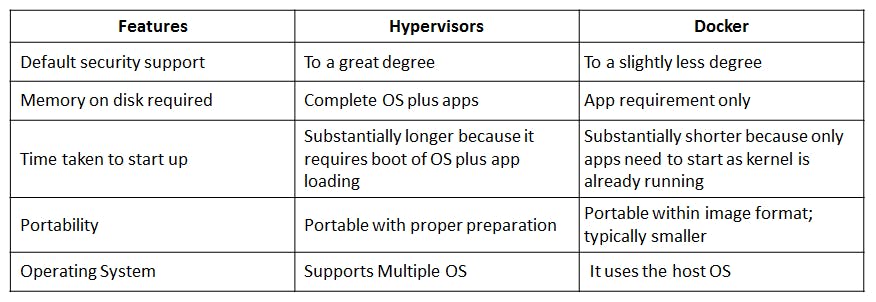

Docker vs Hypervisor?

Docker and hypervisors are two different technologies that enable virtualization. Virtualization is the process of creating a software-based version of something (such as compute, storage, servers, applications, etc.) from a single physical hardware system.

The main difference between Docker and hypervisor is:

Docker uses container-based virtualization, which creates lightweight virtual environments at the operating-system level. Containers share the operating system kernel of the host machine and use its resources directly. Containers are typically faster to start and stop, consume less memory and CPU, and have less overhead than virtual machines.

A hypervisor uses hardware-level virtualization, which creates full-fledged virtual machines. Each virtual machine runs its own operating system kernel on top of virtualized hardware devices. Virtual machines are typically slower to start and stop and consume more memory and CPU than containers, but they are more strongly isolated from each other.

The following diagram illustrates the difference between Docker and hypervisor:

What are the advantages and disadvantages of using Docker?

Docker has both advantages and disadvantages, depending on your needs and preferences. Some of the advantages of using Docker are:

It allows you to create consistent and portable environments for applications that can run on any host where Docker is available, largely independent of how the underlying operating system is set up.

It simplifies the process of packaging and distributing applications along with their dependencies, which reduces the risk of compatibility issues and errors.

It enables faster and more efficient development workflows by allowing developers to build, run, and debug applications locally using containers that mimic the production environment.

It improves the scalability and reliability of applications by allowing them to run in isolated and distributed containers that can be easily managed and orchestrated using tools such as Docker Swarm or Kubernetes.

It enhances the security of applications by providing features such as namespaces, cgroups, seccomp, AppArmor, etc., that limit the access and resources of containers.

Some of the disadvantages of using Docker are:

It is still a relatively new technology that is evolving rapidly and may have some missing features, bugs, or compatibility issues with some systems or platforms.

It requires a learning curve to master its concepts, commands, and best practices, especially for beginners or non-technical users.

It may introduce some performance overhead due to overlay networking, interfacing between containers and the host system, etc., which may affect some applications that require high performance or low latency.

It may pose some challenges for data persistence and backup, as containers are meant to be ephemeral and keep data only in their own writable layer unless volumes or bind mounts are attached. Data in a container is lost when the container is removed unless it is stored in a volume or bind mount, committed to a new image, or backed up externally.

What is a Docker namespace?

Namespaces are a Linux kernel feature that Docker uses to provide process isolation for a container. When a container is started, Docker creates a separate set of namespaces for it, covering resources such as process IDs, network interfaces, file system mounts, and more.

Each namespace operates independently, allowing processes within a container to have an isolated view of these resources. This isolation prevents conflicts and ensures that processes running inside the container do not interfere with processes in other containers or the host system.

What is a Docker registry?

A Docker registry is a service that stores and distributes Docker images, organized into repositories. It can be public or private. The default registry is Docker Hub, which is a public registry that hosts thousands of official and community images. Users can also run their own private registries using tools such as Docker Registry or Harbor. A Docker registry allows users to:

Push and pull images to and from the registry.

Tag and label images to identify them.

Search and browse images in the registry.

Manage access and security policies for images in the registry.

What is an entry point?

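An entry point in Docker is the command that's executed when a container starts. It's specified in the Dockerfile with the ENTRYPOINT instruction and can be overridden at runtime with the --entrypoint option of docker run. It's often used to set the default process for the container, with CMD supplying default arguments to it.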
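A minimal sketch of an entry point combined with default arguments is shown below. The choice of ping as the executable and the image name pinger used afterwards are purely illustrative.

```dockerfile
FROM alpine:3.19

# ENTRYPOINT fixes the executable that runs when the container starts.
ENTRYPOINT ["ping"]

# CMD supplies default arguments that are appended to the ENTRYPOINT.
CMD ["-c", "3", "localhost"]
```

With this image built as pinger, docker run pinger would run ping -c 3 localhost, docker run pinger -c 1 example.com would replace only the default arguments, and docker run --entrypoint sh pinger would replace the executable itself.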

How to implement CI/CD in Docker?

Create a Dockerfile to define the image build process and environment.

Set up a version control system (e.g., Git) to manage your codebase.

Configure a CI/CD tool (e.g., Jenkins, GitLab CI/CD, CircleCI) to monitor the repository for changes.

Use the CI/CD tool to build the Docker image, run tests, and push the image to a Docker registry.

Configure deployment pipelines to deploy the Docker image to your target environments (e.g., staging, production) using container orchestration tools like Kubernetes or Docker Compose.

Will data on the container be lost when the docker container exits?

Not when the container merely exits. Data that is created or modified inside a container is stored in the container's writable layer, which survives a stop and restart but is deleted when the container is removed (for example with docker rm, or automatically if the container was started with --rm). Because this layer is tied to a single container, data that must outlive the container should be stored outside it. There are two options:

Using Docker volumes, which are directories that are created and managed by Docker outside the container’s file system. They can be mounted to one or more containers and can store data even after the containers are stopped or deleted.

Using bind mounts, which are directories that are located on the host machine and can be mounted to a container. They allow the container to access and modify data on the host machine.

What is a Docker swarm?

A Docker swarm is a cluster of Docker nodes that can be managed as a single entity. It allows users to orchestrate and scale multiple containers across multiple machines using simple commands. A Docker swarm consists of:

Manager nodes: The nodes that are responsible for maintaining the state and configuration of the swarm. They also accept commands from the user and delegate tasks to the worker nodes.

Worker nodes: The nodes that execute the tasks assigned by the manager nodes. They run the containers and report their status to the manager nodes.

Services: The units of work that define how many containers of a certain image should run in the swarm and how they should behave.

Tasks: The atomic units of work that represent a single container running in the swarm.

What are the docker commands for the following:

View running containers:

docker ps

Command to run the container under a specific name:

docker run --name <container_name> <image_name>

Command to export a container:

docker export <container_id> > <file_name>.tar

Command to import an already existing Docker image:

docker import <file_name>.tar <image_name>

Commands to delete a container:

docker rm <container_id> or docker rm -f <container_id> (the -f flag forces removal of a running container)

Command to remove all stopped containers, unused networks, build caches, and dangling images:

docker system prune

What are the common Docker practices to reduce the size of Docker Images?

Some of the common Docker practices to reduce the size of Docker Images are:

Using a smaller base image or an alpine image that has minimal packages installed.

Combining multiple RUN commands into one to reduce the number of layers.

Removing unnecessary files or packages in the same RUN command that created them, since files deleted in a later layer still take up space in the earlier one.

Using multi-stage builds to separate the build and runtime environments.

Using a .dockerignore file to exclude unwanted files from the build context.
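As one hedged example of combining RUN commands and cleaning up in the same layer, the sketch below installs two packages on an Ubuntu base. The packages (curl, ca-certificates) are arbitrary examples.

```dockerfile
FROM ubuntu:24.04

# Update, install and clean up in a single RUN so that the downloaded
# package lists never end up in a committed layer.
RUN apt-get update \
    && apt-get install -y --no-install-recommends curl ca-certificates \
    && rm -rf /var/lib/apt/lists/*
```

Had the rm -rf been placed in a separate RUN instruction, the package lists would still be stored in the layer created by the install step, and the image would not get smaller.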

Happy Learning!!!!!!!!